Biological neural networks are large systems of complex elements interacting through a complex array of connections.

How do we describe and interpret the activity of a large population of neurons, and how do we model neural circuits when:

- individual neurons are such complex elements and
- our knowledge of the synaptic connections is so incomplete?

A review paper by L.F. Abbott

Center for Complex Systems

Brandeis University

Waltham, MA 02254

Published in Quart. Rev. Biophys. 27:291-331 (1994)


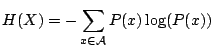

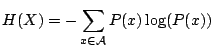

Information theory quantifies how much information a neural response carries about the stimulus. This can be compared to the information transferred in particular models of the stimulus-response function and to maximum possible information transfer. Such comparisons are crucial because they validate assumptions present in any neurophysiological analysis.
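As a rough illustration of what "information carried about the stimulus" means in practice, the sketch below computes Shannon mutual information from a joint stimulus-response probability table. The function name `mutual_information` and the two toy tables are hypothetical, chosen only for illustration; they are not taken from the paper.

```python
import math


def mutual_information(joint):
    """Mutual information in bits for a joint table joint[s][r] = p(s, r).

    Assumes the entries are non-negative and sum to 1.
    """
    p_s = [sum(row) for row in joint]          # marginal over responses
    p_r = [sum(col) for col in zip(*joint)]    # marginal over stimuli
    mi = 0.0
    for i, row in enumerate(joint):
        for j, p in enumerate(row):
            if p > 0:  # terms with p = 0 contribute nothing
                mi += p * math.log2(p / (p_s[i] * p_r[j]))
    return mi


# Hypothetical toy cases: two stimuli, two responses.
perfect = [[0.5, 0.0], [0.0, 0.5]]          # response identifies the stimulus
independent = [[0.25, 0.25], [0.25, 0.25]]  # response says nothing about it

print(mutual_information(perfect))
print(mutual_information(independent))
```

The first table is the maximum-information case for two equally likely stimuli, and the second is the zero-information case, which are the two extremes any model-based estimate would be compared against.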

The authors review information-theory basics before demonstrating its use in neural coding, validating simple stimulus-response models of neural coding of dynamic stimuli.

By Alexander Borst & Frederic E. Theunissen

Nature Neuroscience 2, 947 – 957 (1999)

doi:10.1038/14731


The nervous system represents time-dependent signals in sequences of discrete action potentials, or spikes. All spikes are identical, so information is carried only in the spike arrival times.
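One common way to make the idea of information in spike arrival times concrete is to discretize a spike train into time bins, group the bins into binary "words", and measure the entropy of the word distribution. The sketch below does this under stated assumptions: the helper names `binarize` and `word_entropy`, the bin width, and the example spike times are all hypothetical, and this is not necessarily the procedure used in the paper.

```python
import math
from collections import Counter


def binarize(spike_times, duration, bin_width):
    """Turn spike arrival times into a 0/1 sequence (1 = at least one spike in the bin)."""
    n_bins = int(round(duration / bin_width))
    bins = [0] * n_bins
    for t in spike_times:
        idx = int(t / bin_width)
        if 0 <= idx < n_bins:
            bins[idx] = 1
    return bins


def word_entropy(bins, word_len):
    """Entropy in bits of non-overlapping binary words of length word_len."""
    words = [
        tuple(bins[i:i + word_len])
        for i in range(0, len(bins) - word_len + 1, word_len)
    ]
    counts = Counter(words)
    total = len(words)
    return -sum((c / total) * math.log2(c / total) for c in counts.values())


# Hypothetical example: times in ms, 8 ms of recording, 2 ms bins.
bins = binarize([1.0, 4.5], duration=8, bin_width=2)
print(bins)
print(word_entropy(bins, word_len=2))
```

Because every spike is treated as identical, the binary sequence keeps only when spikes occurred, which is exactly the quantity the word entropy is computed over. Estimates of this kind are sensitive to the amount of data, so short recordings will tend to underestimate the entropy.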

A scientific paper by Steven P. Strong, Roland Koberle, Rob R. de Ruyter van Steveninck, and William Bialek

-NEC Research Institute, Princeton, New Jersey

-Department of Physics, Princeton University, Princeton, New Jersey


Shannon mutual information measures how much information a set of neural activities contains, on average, about a set of stimuli. It has been used extensively to study neural coding in different brain areas. Applying a similar approach to the encoding of a single stimulus requires introducing a quantity specific to that stimulus.
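For reference, the standard definition of mutual information between stimuli $S$ and responses $R$ can be written as an average over stimuli, which shows why it is an "on average" measure. The notation ($p(s)$, $p(r \mid s)$, $p(r)$) is assumed here for illustration and is not taken from the paper.

```latex
I(S;R) \;=\; \sum_{s} p(s) \sum_{r} p(r \mid s)\, \log_2 \frac{p(r \mid s)}{p(r)},
\qquad
p(r) \;=\; \sum_{s} p(s)\, p(r \mid s).
```

The inner sum is one candidate per-stimulus term (the Kullback-Leibler divergence between $p(r \mid s)$ and $p(r)$), but it is only one of several possible decompositions and is not necessarily the quantity proposed in the paper.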

A scientific paper by Michele Bezzi

Accenture Technology Labs, Sophia Antipolis, France


Neurobot via RSS
